Presence detection human only

This presence detector is derived from Presence detection (sparse). Its purpose is to remove false detections caused by non-human moving objects, such as fans, plants, and curtains, in a small room. It works by comparing the fast motion score with the slow motion score. Our investigation shows that a human creates high spikes in both the fast and the slow motion score, while moving objects create high spikes in the slow motion score only. The key concepts of this presence detector are:

- Comparing the fast and slow motion scores to individually set thresholds

- An adaptive threshold using a fast motion guard interval

- Fast motion outlier detection for quiet rooms (e.g. meeting rooms)

Slow motion and fast motion

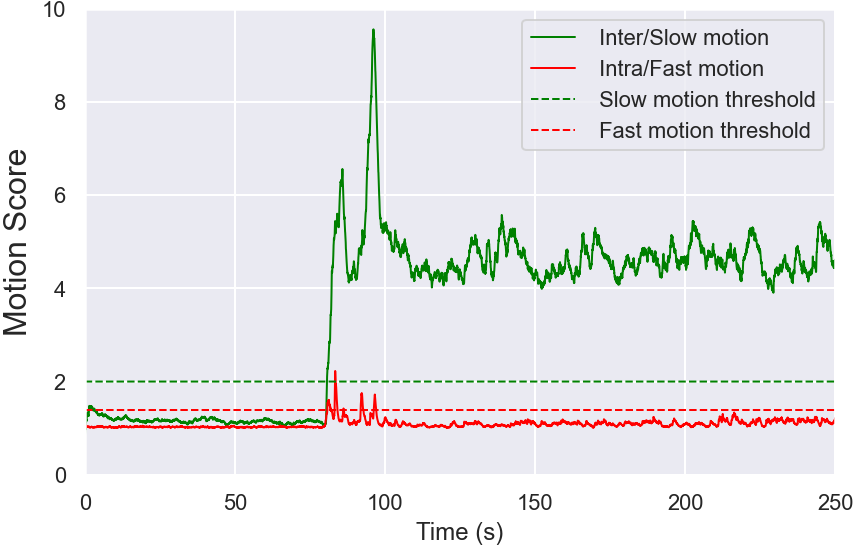

Figure 76 shows an example of fast and slow motion detections, measured in a room of approximately 3 x 3 x 3 m. The events are:

- 0-80 s: empty room

- 80-100 s: people enter, install and turn on a fan

- 100-250 s: empty room with the fan turned on

Figure 76 A measurement with multiple cases of human and fan presence

Figure 76 cannot be viewed directly in the Exploration Tool GUI; it was used during algorithm development. From it we conclude:

- The inter-frame (slow) motion captures human presence from small movements such as breathing.

- The intra-frame (fast) motion captures larger movements of the human body.

The fast motion score, \(s_\text{fast}(f)\), and the slow motion score, \(s_\text{slow}(f)\), are calculated by taking the maximum over distance of the depthwise scores \(\bar{s}_\text{fast}(f, d)\) and \(\bar{s}_\text{slow}(f, d)\), respectively. These depthwise scores are the \(\bar{s}_\text{intra}(f, d)\) and \(\bar{s}_\text{inter}(f, d)\) computed by the Presence detection (sparse) processor. The motion scores are then compared to the fast motion threshold, \(v_f\), and the slow motion threshold, \(v_s\), to obtain the fast motion detection, \(p_f\), and the slow motion detection, \(p_s\). Finally, presence, \(p\), is detected if either fast motion or slow motion is detected.
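Written out, these steps amount to \(s_\text{fast}(f) = \max_d \bar{s}_\text{fast}(f, d)\), \(s_\text{slow}(f) = \max_d \bar{s}_\text{slow}(f, d)\), \(p_f = s_\text{fast}(f) > v_f\), \(p_s = s_\text{slow}(f) > v_s\) and \(p = p_f \lor p_s\). The NumPy sketch below illustrates this per-frame decision. It is not the exptool implementation: the function name `detect_presence` is hypothetical, the inputs are assumed to be the depthwise scores of one frame as 1-D arrays over distance, and the default thresholds are taken from the defaults of fast_motion_threshold and slow_motion_threshold listed under Configuration parameters.

```python
import numpy as np


def detect_presence(fast_depthwise, slow_depthwise, v_f=1.4, v_s=2.0):
    """Hypothetical per-frame presence decision (sketch, not the exptool code).

    fast_depthwise, slow_depthwise: depthwise scores for one frame, one value per distance.
    v_f, v_s: fast and slow motion thresholds.
    """
    # Collapse the depthwise scores to one score per frame: maximum over distance
    s_fast = float(np.max(fast_depthwise))
    s_slow = float(np.max(slow_depthwise))

    # Compare each score to its own, individually set threshold
    p_f = s_fast > v_f
    p_s = s_slow > v_s

    # Presence is detected if either fast or slow motion is detected
    p = p_f or p_s
    return s_fast, s_slow, p_f, p_s, p


# A fan-like frame: strong slow motion, no fast motion
print(detect_presence(np.array([0.2, 0.5, 0.3]), np.array([1.0, 2.6, 1.2])))
```

With fixed thresholds, the fan-like frame is still reported as presence through the slow motion path alone, which is the kind of false detection the adaptive threshold is meant to suppress.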

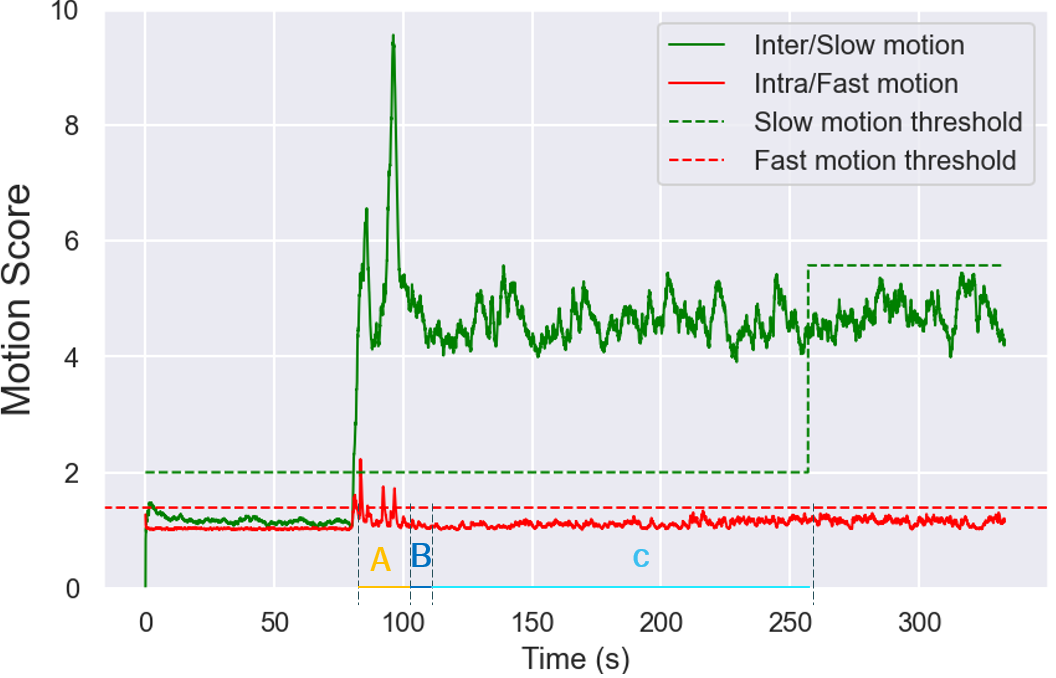

Adaptive threshold

The adaptive threshold is a configuration option for removing false detections caused by fans, plants, and curtains. As mentioned above, these objects mainly create slow motion. The adaptive threshold works by recording the maximum slow motion score at each distance during a recording period. These recorded values are then used as a new, per-distance threshold, on the assumption that they are the highest slow motion scores the non-human objects produce. No fast motion detection is expected during the recording period. The implementation relies on three main time windows, A, B and C, shown in Figure 77. This figure cannot be viewed directly in the Exploration Tool GUI; it was used during algorithm development.

A. A person is inside the room or within the sensor coverage. Fluctuations in the slow motion score are not recorded, because fast motion is detected. The recording period starts once there is no more fast motion.

B. The room is empty, but the slow motion score has not yet settled because of the low pass filtering in the presence detector, so the slow motion score is not recorded. The length of this time window, \(w_h\), depends on slow_motion_deviation_time_const, \(\tau\).

C. The last time window, \(w_\text{cal}\), is the actual recording step: the slow motion scores recorded here are later set as the new threshold.

Time windows B and C together form the fast motion guard window, \(w_g = w_h + w_\text{cal}\), whose length is controlled by fast_guard_s.

Figure 77 Implementation of adaptive_threshold

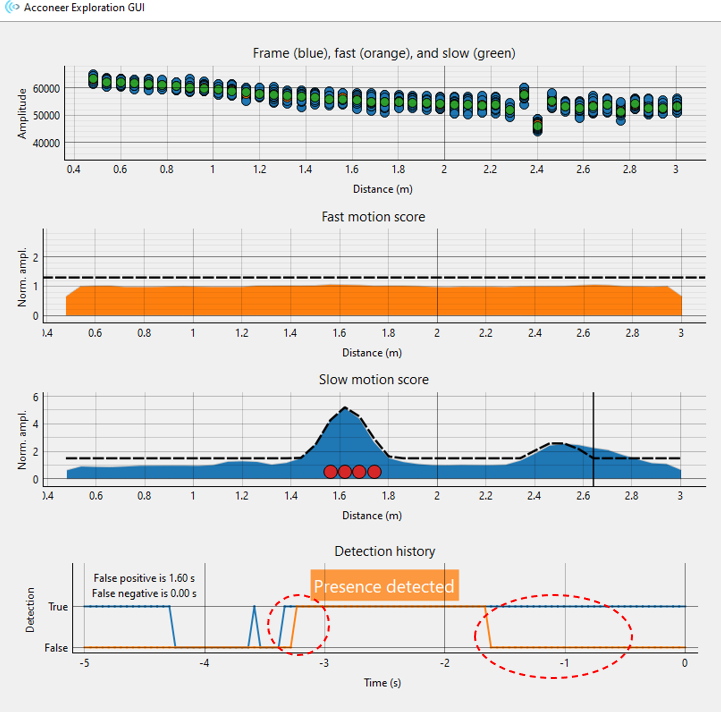
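The recording logic for windows A, B and C can be sketched as a small state machine that counts consecutive frames without fast motion. This is a minimal sketch, not the exptool implementation: the class name `AdaptiveSlowThreshold` and the `hold_s` parameter are hypothetical, \(w_h\) is passed directly in seconds because its exact dependence on \(\tau\) is not reproduced here, and the guard window \(w_g\) is taken to be fast_guard_s with \(w_\text{cal} = w_g - w_h\).

```python
import numpy as np


class AdaptiveSlowThreshold:
    """Hypothetical sketch of the adaptive slow motion threshold.

    Assumptions: frames arrive at a fixed rate `frame_rate` (Hz); w_h is given
    directly as `hold_s` seconds; w_g = fast_guard_s and w_cal = w_g - w_h.
    """

    def __init__(self, frame_rate, fast_guard_s=160.0, hold_s=5.0):
        self.n_guard = int(round(fast_guard_s * frame_rate))  # frames in w_g (windows B + C)
        self.n_hold = int(round(hold_s * frame_rate))  # frames in w_h (window B)
        self.quiet_frames = 0  # consecutive frames without fast motion
        self.recorded_max = None  # running per-distance maximum during w_cal
        self.threshold = None  # per-distance threshold, set once a full guard window has passed

    def update(self, p_f, slow_depthwise):
        slow_depthwise = np.asarray(slow_depthwise, dtype=float)
        if p_f:
            # Window A: fast motion restarts the guard window and discards the recording
            self.quiet_frames = 0
            self.recorded_max = None
            return self.threshold
        self.quiet_frames += 1
        if self.quiet_frames <= self.n_hold:
            # Window B: the slow motion score is still settling, so nothing is recorded
            return self.threshold
        if self.quiet_frames <= self.n_guard:
            # Window C: record the highest slow motion score seen at each distance
            if self.recorded_max is None:
                self.recorded_max = slow_depthwise.copy()
            else:
                self.recorded_max = np.maximum(self.recorded_max, slow_depthwise)
            if self.quiet_frames == self.n_guard:
                # Guard window completed: the recorded maxima become the new threshold
                self.threshold = self.recorded_max.copy()
        return self.threshold


adaptive = AdaptiveSlowThreshold(frame_rate=1.0, fast_guard_s=4.0, hold_s=2.0)
adaptive.update(True, [5.0, 5.0])  # a person moving: window A
for frame in ([1.0, 2.0], [3.0, 1.0], [2.0, 2.0], [1.0, 4.0]):
    threshold = adaptive.update(False, frame)
print(threshold)
```

In this example the first two quiet frames, [1.0, 2.0] and [3.0, 1.0], fall in window B and are ignored, so the recorded threshold is the per-distance maximum of the last two frames, [2.0, 4.0].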

In some cases, a non-human object can create motion that is detected as presence. The false detections marked with red circles in Figure 78 (false negatives and false positives) are caused by the slow motion detection and the fast motion detection giving different results.

When the adaptive threshold is enabled, the threshold at each distance is automatically raised to a value above the measured slow motion score. The third plot in Figure 78 shows the threshold for the case of a plant in the room, after the fast motion guard period has completed.

Figure 78 UI appearance

After the fast guard window, the slow motion threshold no longer has the same value at all distances. The slow motion detection, \(p_s\), is then redefined as a comparison between the depthwise slow motion score, \(\bar{s}_\text{slow}(f, d)\), and the recorded depthwise slow motion threshold, \(\bar{v}_s(d)\).
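A sketch of the redefined \(p_s\), assuming that slow motion is flagged when the score exceeds the recorded threshold at any distance, i.e. \(p_s = \bigvee_d \left(\bar{s}_\text{slow}(f, d) > \bar{v}_s(d)\right)\). The reduction over distances with `any` is an assumption, and `slow_motion_detected` is a hypothetical name.

```python
import numpy as np


def slow_motion_detected(slow_depthwise, v_s, v_s_depthwise=None):
    """Hypothetical slow motion decision p_s before and after the guard window.

    Before the guard window completes, v_s_depthwise is None and the maximum of
    the depthwise scores is compared to the scalar threshold v_s. Afterwards, each
    distance is compared to its own recorded threshold; reducing with any() is
    an assumption of this sketch.
    """
    slow_depthwise = np.asarray(slow_depthwise, dtype=float)
    if v_s_depthwise is None:
        return bool(np.max(slow_depthwise) > v_s)
    return bool(np.any(slow_depthwise > np.asarray(v_s_depthwise, dtype=float)))


# A plant raises the score at the second distance, but not above that distance's recorded threshold
print(slow_motion_detected([1.0, 3.0, 1.0], v_s=2.0))
print(slow_motion_detected([1.0, 3.0, 1.0], v_s=2.0, v_s_depthwise=[2.0, 3.5, 2.0]))
```

A person breathing at the third distance, e.g. a score of 2.5 there, would still exceed that distance's recorded threshold of 2.0 and be detected.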

Fast motion outlier detection

In fast motion detection, a human is detected if they create enough fast motion. A person sitting still creates only a very low spike. Because the fast motion score is otherwise very stable, such a low spike can still be detected even though it does not exceed the threshold value. The purpose of fast motion outlier detection is to detect this relatively short and weak fast motion. It records the fast motion score, \(s_\text{fast}(f)\), over previous frames and sorts the recorded values in ascending order, giving \(\bar{u}\). The \(1^{st}\) and \(3^{rd}\) quartiles are then calculated with a percentile-based formula. With \(n_s\) being the number of recorded values, we have:

The interquartile range, \(IQR\), of the fast motion score is calculated to determine the boundaries for the outlier detection. Our investigation shows that a human creates an \(IQR\) value above 0.15, so the \(IQR\) is capped at this value. The lower boundary, \(L_b\), and the upper boundary, \(U_b\), are then calculated from the \(IQR\) and \(Q_\text{factor}\). \(Q_\text{factor}\) adjusts the boundaries and sets the sensitivity of the outlier detection: the more the fast motion score fluctuates in an empty room, the higher \(Q_\text{factor}\) should be. In our investigation, a \(Q_\text{factor}\) of 3 was optimal for differentiating between a human and other objects. Finally, the fast motion detection, \(p_f\), is also triggered when the fast motion score falls outside these boundaries.
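The outlier check can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the exptool implementation: `fast_motion_outlier` and `IQR_CAP` are hypothetical names, `np.percentile` (linear interpolation) stands in for the percentile-based quartile formula, and the boundaries are assumed to take the usual Tukey-fence form \(L_b = Q_1 - Q_\text{factor} \cdot IQR\) and \(U_b = Q_3 + Q_\text{factor} \cdot IQR\).

```python
import numpy as np

IQR_CAP = 0.15  # from the text: the IQR is never allowed to exceed this value


def fast_motion_outlier(history, s_fast, q_factor=3.0):
    """Hypothetical fast motion outlier check (sketch, not the exptool code).

    history: recorded fast motion scores s_fast from previous frames.
    s_fast: fast motion score of the current frame.
    Assumes np.percentile quartiles and Tukey-style boundaries.
    """
    u = np.sort(np.asarray(history, dtype=float))  # sorted history, u-bar
    q1, q3 = np.percentile(u, [25, 75])
    iqr = min(q3 - q1, IQR_CAP)  # cap the interquartile range
    lower = q1 - q_factor * iqr  # L_b
    upper = q3 + q_factor * iqr  # U_b
    # The frame counts as fast motion if its score falls outside the boundaries
    return bool(s_fast < lower or s_fast > upper), (lower, upper)


# Quiet meeting room: a stable fast motion score, then a weak spike from a seated person
history = [0.50, 0.52, 0.49, 0.51, 0.50, 0.48, 0.52, 0.50]
s_fast = 0.65  # well below the default fast_motion_threshold of 1.4
is_outlier, (lower, upper) = fast_motion_outlier(history, s_fast)
p_f = s_fast > 1.4 or is_outlier  # p_f is also triggered by an outlier
print(p_f, lower, upper)
```

Because the history is so stable, the boundaries end up tight around 0.5 and the weak spike is flagged even though it never reaches the fixed threshold; the cap on the IQR keeps a noisy history from widening the boundaries without limit.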

Note

Some configuration parameters in Presence detection human only are exactly the same as in Presence detection (sparse), only renamed:

- slow_motion_hf_cutoff is the same as inter_frame_fast_cutoff

- slow_motion_lf_cutoff is the same as inter_frame_slow_cutoff

- slow_motion_deviation_time_const is the same as inter_frame_deviation_time_const

- fast_motion_time_const is the same as intra_frame_time_const
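When porting settings from Presence detection (sparse), the renames in the note can be captured in a lookup table. The sketch below works on plain dictionaries of parameter values, not on exptool configuration objects; the table name `HUMAN_ONLY_TO_SPARSE` and the helper `sparse_to_human_only` are hypothetical, and parameters without a counterpart are simply dropped.

```python
# Renames from the note: Presence detection human only name -> Presence detection (sparse) name
HUMAN_ONLY_TO_SPARSE = {
    "slow_motion_hf_cutoff": "inter_frame_fast_cutoff",
    "slow_motion_lf_cutoff": "inter_frame_slow_cutoff",
    "slow_motion_deviation_time_const": "inter_frame_deviation_time_const",
    "fast_motion_time_const": "intra_frame_time_const",
}


def sparse_to_human_only(sparse_params):
    """Hypothetical helper: rename shared sparse parameters in a plain dict.

    Parameters without a counterpart in the table are dropped.
    """
    sparse_to_human = {sparse: human for human, sparse in HUMAN_ONLY_TO_SPARSE.items()}
    return {
        sparse_to_human[name]: value
        for name, value in sparse_params.items()
        if name in sparse_to_human
    }


print(sparse_to_human_only({"inter_frame_fast_cutoff": 20.0, "intra_frame_time_const": 0.5}))
```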

Graphical overview

Configuration parameters

- class acconeer.exptool.a111.algo.presence_detect_human_only.ProcessingConfiguration

- slow_motion_threshold

Level at which the slow motion detection is considered as “Slow motion”.

Type: float, Default value: 2

- slow_motion_hf_cutoff

Cutoff frequency of the low pass filter for the fast filtered sweep mean. No filtering is applied if the cutoff is set over half the frame rate (Nyquist limit).

Type: float, Unit: Hz, Default value: 20.0

- slow_motion_lf_cutoff

Cutoff frequency of the low pass filter for the slow filtered sweep mean.

Type: float, Unit: Hz, Default value: 0.2

- slow_motion_deviation_time_const

Time constant of the low pass filter for the (slow-motion) deviation between fast and slow.

Type: float, Unit: s, Default value: 3

- fast_motion_threshold

Level at which the fast motion detection is considered as “Fast motion”.

Type: float, Default value: 1.4

- fast_motion_time_const

Time constant for the fast motion part.

Type: float, Unit: s, Default value: 0.5

- fast_motion_outlier

Enables outlier detection in the fast motion detection, to improve detection in quiet rooms such as meeting rooms.

Type: bool, Default value: False

- num_removed_pc

Sets the number of principal components removed in the PCA based noise reduction. Filters out static reflections. Setting to 0 (default) disables the PCA based noise reduction completely.

Type: int, Default value: 0

- show_data

Show the plot of the current data frame along with the fast and slow filtered mean sweep (used in the slow-motion part).

Type: bool, Default value: True

- show_slow

Show the slow motion plot.

Type: bool, Default value: True

- show_fast

Show the fast motion plot.

Type: bool, Default value: True

- adaptive_threshold

Changes the threshold array based on the slow and fast motion processing.

Type: bool, Default value: True

- show_sectors

Type: bool, Default value: False

- fast_guard_s

Length, in seconds, of the guard period during which there must be no fast motion. During the recording part of this period, the maximum slow motion score is recorded and used as the new threshold.

Type: float, Unit: s, Default value: 160

- history_length_s

Type: float, Unit: s, Default value: 5